Saving Every Prompt Doesn’t Make You(r) Team Smarter

Why dumping every AI prompt into a library slows your team down, and what to build instead.

I keep seeing this advice pop up on LinkedIn and in AI Slack channels:

“Log all your AI prompts. Build a prompt library. Share it with the team.”

Sounds efficient. Sounds like a productivity hack. But here’s how it usually plays out:

You end up with a graveyard of half-baked prompts nobody trusts.

The library grows so big no one bothers to search it.

People stop experimenting because they think “the right prompt” already exists somewhere in the archive.

It’s like collecting recipes but never cooking. Eventually you just have a Pinterest board of meals you’ve never tasted. (Guilty.)

Why “Saving Everything” Feels Safe but Backfires

The biggest misconception is treating prompts like standard operating procedures (SOPs). SOPs work when the process doesn’t change. AI doesn’t work that way.

Prompting isn’t a one-time “write it and reuse it” skill. It’s an active, adaptive conversation.

Models change. A prompt that worked perfectly on GPT-4 in March might behave differently after a July update.

Goals shift. The tone, depth, or creativity you need today may not match what you needed last quarter.

Context matters. The same prompt in different projects, with different data, can yield wildly different outputs.

When teams lean too heavily on old prompts, they forget prompting is a living skill. And a stale skill is a useless skill.

What Actually Builds AI Competence

If you want to get better at using AI, yourself or as a team, and not just faster at copy-pasting, focus on these three skills:

Knowing when to prompt and when to stop. Some tasks are faster done manually or need more human judgment than AI can provide.

Rapid refinement. The magic isn’t in the first prompt. It’s in how quickly you can adjust and get the output you need.

Understanding AI’s strengths and weaknesses. When you know where the model shines, you stop forcing it into jobs it’s not suited for.

This shifts prompting from a static lookup to an active thinking process. Not every task needs AI.

Build Feedback Loops Into the Workflow

The fastest way to make AI work better for your team isn’t saving more prompts. It’s building feedback loops.

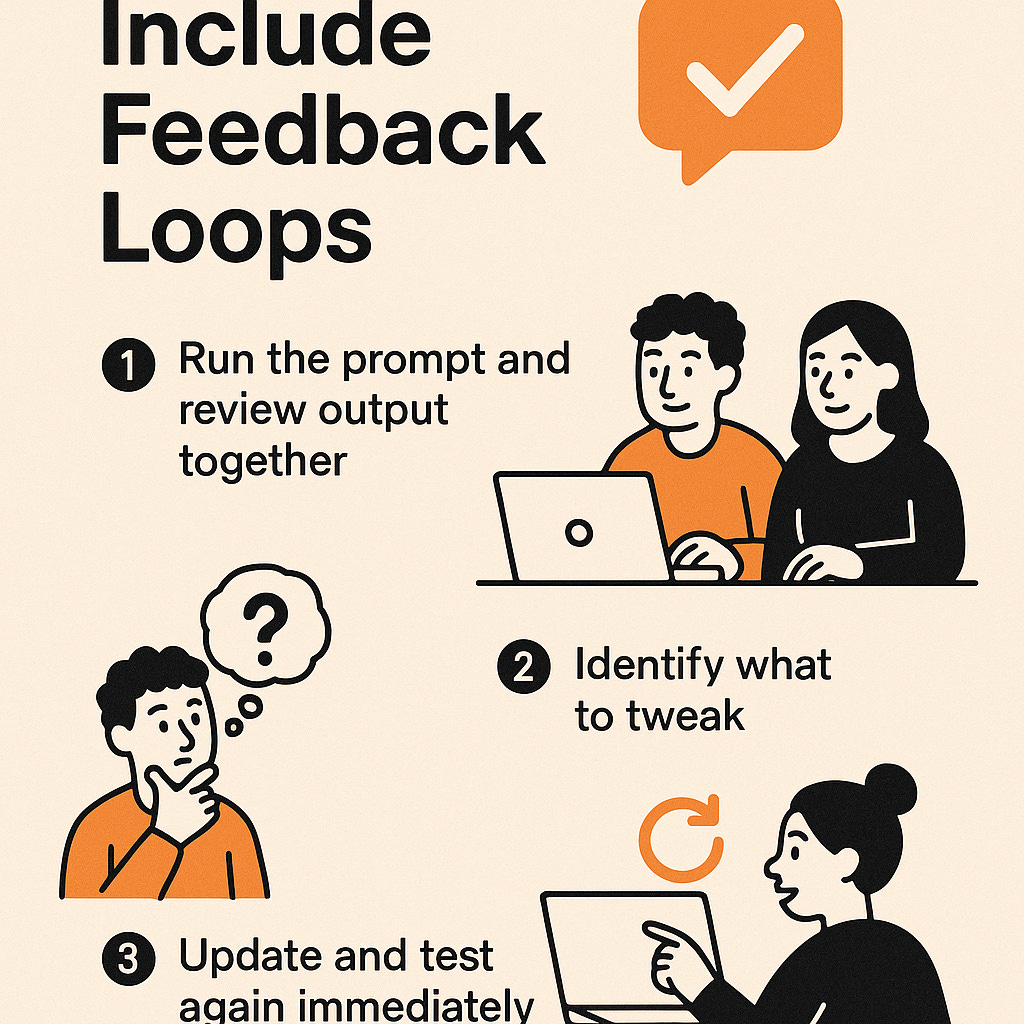

A good feedback loop has three stages:

Run the prompt and review the output together. Go beyond “does it look fine?” and check whether it meets the actual brief.

Identify what to tweak. Was the instruction unclear? Too broad? The wrong tone?

Update and test again immediately. Waiting until “later” kills the momentum, while live tweak-and-test cycles build instincts fast.

To make this part of your workflow:

Hold short, focused review sessions to work on AI outputs together.

Keep version histories so you can see how prompts evolve.

Capture learnings in plain language (for example, “This version works for short-form marketing copy, but not for long-form analysis”).

The value isn’t in ending up with a “perfect prompt,” and that should never be the goal. It’s in sharpening your own or your team’s ability to diagnose and improve outputs in real time.
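One lightweight way to keep version histories and plain-language learnings side by side is sketched below. This is only an illustration, not a recommended tool: the `PromptVersion` and `PromptHistory` names, the example prompts, and the dates are all made up for the sketch.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class PromptVersion:
    text: str          # the prompt wording for this version
    note: str          # plain-language learning captured after review
    revised_on: date   # when the team made this revision


@dataclass
class PromptHistory:
    name: str
    versions: list[PromptVersion] = field(default_factory=list)

    def revise(self, text: str, note: str, revised_on: date) -> None:
        """Append a new version instead of overwriting the old one."""
        self.versions.append(PromptVersion(text, note, revised_on))

    def current(self) -> PromptVersion:
        """Return the most recent version (assumes at least one exists)."""
        return self.versions[-1]

    def changelog(self) -> list[str]:
        """One line per version, oldest first, showing what was learned."""
        return [
            f"v{i} ({v.revised_on.isoformat()}): {v.note}"
            for i, v in enumerate(self.versions, start=1)
        ]


# Hypothetical example data, for illustration only.
history = PromptHistory("product-blurb")
history.revise(
    "Write a product blurb for {product}.",
    "Too broad; output ran long and generic.",
    date(2024, 3, 4),
)
history.revise(
    "Write a 50-word product blurb for {product} in a friendly tone.",
    "Works for short-form marketing copy, but not for long-form analysis.",
    date(2024, 3, 11),
)

for line in history.changelog():
    print(line)
```

A shared document or spreadsheet would do the same job. What matters is that each revision keeps its note, so the team can see why a prompt changed and not only what it changed to.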

Model Adaptive Thinking

Set the tone by questioning whether an existing prompt is truly the best fit. Recognition should go to improvements and refinements, not just “finding” something in the archive.

The Alternative to a Giant Prompt Graveyard

Instead of dumping every prompt into a shared folder, build a living collection:

A shortlist of 10 actively maintained prompts. Review and replace them regularly.

Annotations. Record why it worked and when to use it.

Live training sessions. Test prompts together, edit in real time, and see how small tweaks change the output.

One is a stagnant archive. The other is an evolving skill base.
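If your team wants the “small and actively maintained” rule to be enforced and not just remembered, a capped shortlist could look something like this sketch. The cap of 10 comes from the list above; the 30-day review cadence, the class names, and the example entry are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta

MAX_ACTIVE = 10                    # shortlist size suggested above
REVIEW_EVERY = timedelta(days=30)  # assumed review cadence, adjust to taste


@dataclass
class ShortlistEntry:
    name: str
    prompt: str
    why_it_worked: str   # annotation: why this prompt works
    when_to_use: str     # annotation: where it fits and where it does not
    last_reviewed: date


class Shortlist:
    def __init__(self) -> None:
        self.entries: dict[str, ShortlistEntry] = {}

    def add(self, entry: ShortlistEntry) -> None:
        """Add or update an entry, refusing to grow past the cap."""
        if entry.name not in self.entries and len(self.entries) >= MAX_ACTIVE:
            raise ValueError("Shortlist is full: retire a prompt before adding one.")
        self.entries[entry.name] = entry

    def retire(self, name: str) -> None:
        """Remove a prompt that no longer earns its place."""
        del self.entries[name]

    def due_for_review(self, today: date) -> list[str]:
        """Names of prompts not reviewed within the review window."""
        return sorted(
            name
            for name, entry in self.entries.items()
            if today - entry.last_reviewed >= REVIEW_EVERY
        )


# Hypothetical example entry, for illustration only.
shortlist = Shortlist()
shortlist.add(ShortlistEntry(
    name="product-blurb",
    prompt="Write a 50-word product blurb for {product} in a friendly tone.",
    why_it_worked="The word limit and tone instruction removed generic filler.",
    when_to_use="Short-form marketing copy; not long-form analysis.",
    last_reviewed=date(2024, 6, 1),
))
print(shortlist.due_for_review(today=date(2024, 7, 15)))  # ['product-blurb']
```

The hard cap is the design choice that matters here: adding an eleventh prompt forces a conversation about which existing one to retire.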

The Role of Cognitive Load on Humans

Working memory has limits. Large, unstructured repositories increase search time, so fewer prompts actually get applied. Once a prompt library grows past 10–15 active entries, it can become harder to navigate than simply starting fresh.

Feedback loops solve this by externalizing the cognitive load: the team works together to improve prompts in real time, instead of leaving every individual to figure it out alone.

Limitations of Feedback Loops

Feedback loops require consistent participation and facilitation. Without discipline, they can drift into unfocused experimentation with no record of progress. In compliance-heavy industries, prompt archiving may still be necessary, but feedback cycles can be layered on top to keep skills sharp.

Measuring Success

To evaluate whether feedback loops outperform prompt libraries, here are some metrics you can track:

Output Quality Scores: Subjective ratings or client satisfaction surveys before and after implementation. (In my experience, this is hard to score.)

Speed to Output: Time from task assignment to the final AI-assisted deliverable, including all iterations.

Prompt Modification Rate: Frequency with which prompts are updated, indicating active engagement.

Reuse Rate: Percentage of prompts from the active list used in the past month.
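The three countable metrics above can be computed from a simple log of edits and uses. The sketch below assumes you record a timestamp each time a prompt is revised or used; the `PromptRecord` structure, the function names, and the sample data are hypothetical, and "modification rate" is interpreted here as average edits per prompt within a time window.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PromptRecord:
    name: str
    edits: list[datetime]  # one timestamp per revision
    uses: list[datetime]   # one timestamp per use


def _in_window(stamps: list[datetime], start: datetime, end: datetime) -> int:
    """Count timestamps in the half-open window [start, end)."""
    return sum(1 for t in stamps if start <= t < end)


def modification_rate(records: list[PromptRecord], start: datetime, end: datetime) -> float:
    """Average number of edits per prompt within the window."""
    if not records:
        return 0.0
    return sum(_in_window(r.edits, start, end) for r in records) / len(records)


def reuse_rate(records: list[PromptRecord], start: datetime, end: datetime) -> float:
    """Percentage of prompts used at least once within the window."""
    if not records:
        return 0.0
    used = sum(1 for r in records if _in_window(r.uses, start, end) > 0)
    return 100.0 * used / len(records)


def speed_to_output(assigned: datetime, delivered: datetime) -> timedelta:
    """Elapsed time from task assignment to final deliverable, iterations included."""
    return delivered - assigned


# Hypothetical sample data, for illustration only.
records = [
    PromptRecord("blurb", [datetime(2024, 6, 3), datetime(2024, 6, 10)],
                 [datetime(2024, 6, 4), datetime(2024, 6, 12)]),
    PromptRecord("summary", [datetime(2024, 6, 5)], [datetime(2024, 6, 20)]),
    PromptRecord("email", [], [datetime(2024, 5, 15)]),
    PromptRecord("faq", [datetime(2024, 5, 20), datetime(2024, 6, 25)],
                 [datetime(2024, 6, 26)]),
]
june_start, june_end = datetime(2024, 6, 1), datetime(2024, 7, 1)

print(modification_rate(records, june_start, june_end))  # 1.0
print(reuse_rate(records, june_start, june_end))         # 75.0
print(speed_to_output(datetime(2024, 6, 3, 9, 0), datetime(2024, 6, 3, 11, 30)))  # 2:30:00
```

Output quality is left out on purpose. It stays a human rating, which fits the note above that it is hard to score.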

To End

Prompt libraries might seem like a neat way to make AI use consistent, but in reality they often create more clutter than clarity. Their static nature is out of step with the way AI actually works, which is fluid, conversational, and constantly shifting. Relying on old prompts can lock teams into stale, dated thinking.

Feedback loops, on the other hand, keep skills fresh. They turn prompting into an active practice where people learn by testing, refining, and sharing in real time. Over time, this approach not only keeps pace with changes in AI models but also builds the team’s collective instincts. It is more collaborative too, so everyone on the team learns and experiments together.

A small, annotated set of prompts, updated regularly and backed by live collaborative refinement, beats a massive archive every time. When you track versions, capture why something worked, and practice together, AI stops being a tool you “look up” and becomes a capability you truly own.

About the author:

Sahan Rao is a marketer and AI advisor to SMBs with more than 15 years of experience leading growth, go-to-market, and lifecycle campaigns across SaaS, finance, and tech. As the founder of LeadAi Solutions, she helps SMBs design smarter AI-powered processes in marketing, sales, and customer experience by bringing clarity to operations, agility to creative work, and ethical automation to every solution.
